Performative Prediction

Machine learning systems are increasingly used to support decision-making processes (Fischer-Abaigar et al., 2024). Yet, these systems do not merely reflect the world—they also reshape it. Once deployed, predictions can influence behaviors, alter policies, and redirect resources, creating feedback loops that change the very data-generating processes they aim to model.

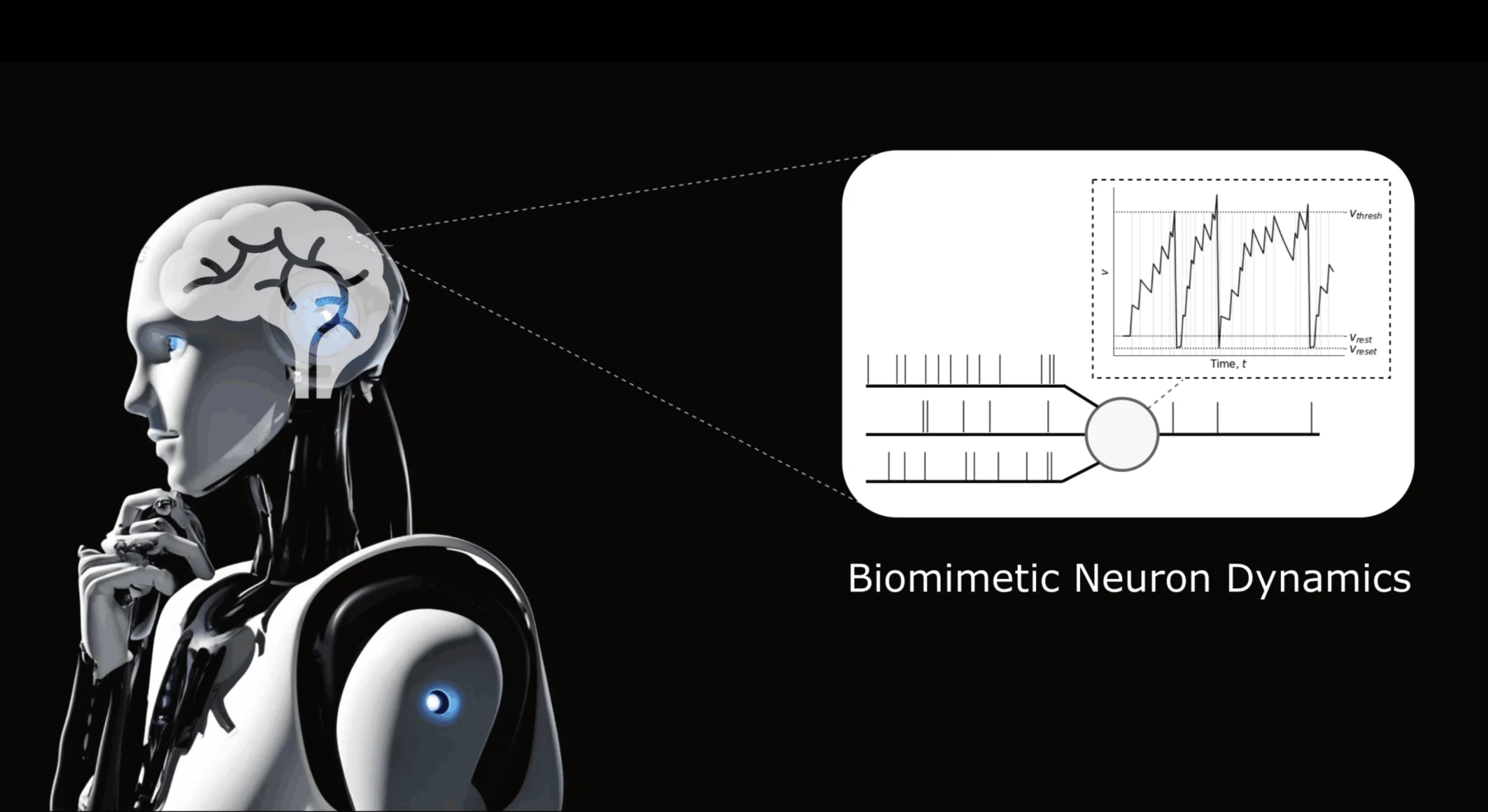

Consider a traffic routing application that recommends the fastest route. As more users follow the recommendation, congestion may build up along the suggested path, making it less efficient over time. Similarly, if a caseworker sees a jobseeker flagged by a model as high-risk and responds by allocating additional support, the individual's outcome may improve, invalidating the original prediction. In both cases, the model's output causally affects the outcomes it aims to predict. We refer to such forecasts as performative predictions (Perdomo et al., 2020). The performative loop is depicted in the figure above, taken from the UAI 2024 tutorial by Mendler-Dünner & Zrnic (2024).

Formalizing Performative Prediction

Performative prediction provides a framework for reasoning about such settings, where the model itself shapes future data. Formally, we consider a predictive model \(f_\theta\) and introduce a distribution map \(\mathcal{D}(\cdot)\), which associates model parameters \(\theta\) with the distribution \(\mathcal{D}(\theta)\) over covariates and outcomes that emerges once the model is deployed.

This leads to the concept of performative risk that accounts for this feedback. Rather than evaluating the model on a fixed data distribution, performative risk captures the loss after the model has influenced the environment and induced a new data-generating process. It is defined as:

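\[R(\theta, \mathcal{D}(\theta)) := \mathbb{E}_{(x, y) \sim \mathcal{D}(\theta)}\big[\ell(f_\theta(x), y)\big].\]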

Here, the model affects performance in two distinct ways: first, through its direct role in the loss function \(\ell(f_\theta(x), y)\), and second, by shaping the distribution \(\mathcal{D}(\theta)\) from which future data is drawn.
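To make the two roles of the model concrete, the following is a minimal sketch rather than a reference implementation. It assumes a hypothetical one-dimensional setting: the model is a constant predictor with parameter `theta`, the loss is the squared error, and the distribution map shifts the mean outcome linearly in the deployed parameter. The function names and the constants `mu`, `eps`, and `sigma` are illustrative assumptions, as is treating `theta = 0` as the pre-deployment baseline.

```python
import numpy as np

def sample_outcomes(theta_deployed, n, rng, mu=1.0, eps=0.5, sigma=0.1):
    """Hypothetical distribution map D(theta): deploying theta shifts the mean outcome."""
    return rng.normal(loc=mu - eps * theta_deployed, scale=sigma, size=n)

def squared_loss(theta, y):
    """Loss of the constant predictor theta on outcomes y."""
    return (y - theta) ** 2

def risk(theta_eval, theta_deployed, n=200_000, seed=0):
    """Monte Carlo estimate of R(theta_eval, D(theta_deployed))."""
    rng = np.random.default_rng(seed)
    y = sample_outcomes(theta_deployed, n, rng)
    return float(np.mean(squared_loss(theta_eval, y)))

theta = 1.0
# Risk on the baseline distribution D(0): the model has not yet influenced the data.
static_risk = risk(theta, theta_deployed=0.0)
# Performative risk: the same model evaluated on the distribution it induces, D(theta).
performative_risk = risk(theta, theta_deployed=theta)

print(f"static risk:       {static_risk:.3f}")
print(f"performative risk: {performative_risk:.3f}")
```

In this toy setup the same parameter looks accurate on the baseline data but incurs a noticeably higher loss once its own influence on the outcomes is taken into account, which is the gap that performative risk is meant to capture.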

Solution Concepts: Performative Stability and Optimality

In performative settings, two properties of models become relevant:

- Performative stability describes an equilibrium under retraining: once the model is deployed and has influenced the data distribution, continued retraining on the newly observed distribution does not lead to further changes in the model. Formally, a performatively stable model \(\theta_{\mathrm{PS}}\) satisfies:

  \[\theta_{\mathrm{PS}} = \text{argmin}_\theta R(\theta, \mathcal{D}(\theta_{\mathrm{PS}})),\]

  meaning that the model is optimal under the distribution it induces. Importantly, performative stability does not imply performative optimality. A different model may exist that achieves lower risk on its own induced distribution, even if it is not a fixed point. In other words, a stable model need not be the best possible model. Determining whether retraining actually converges to a stable point requires assumptions about the nature of the distribution map \(\mathcal{D}(\cdot)\) and the loss function. For instance, if the loss function is strongly convex and sufficiently smooth, and the induced distribution is not too sensitive to changes in the model parameters, then iterative retraining is guaranteed to converge to a stable point.

- Performative optimality means that the model achieves the minimal performative risk:

  \[\theta_{\mathrm{PO}} := \text{argmin}_\theta R(\theta, \mathcal{D}(\theta)).\]

Optimizing for performative optimality is challenging due to the dependence on the distribution map \(\mathcal{D}(\cdot)\), which is often unknown or difficult to estimate. Broadly, optimization methods for performative risk can be categorized into two approaches:

- (1) Model-Free Approaches: These methods do not explicitly model the distribution map but instead rely on data collected from multiple deployments and general regularity conditions.

- (2) Model-Based Approaches: These approaches explicitly model the distribution map, often incorporating domain knowledge. For instance, they may assume that agents manipulate their data in response to deployment according to some utility-maximizing behavior. Such assumptions can lead to faster convergence by structuring the optimization problem around a known response function.
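The difference between a stable point and a performative optimum can be illustrated with a small simulation. The sketch below is a toy example under stated assumptions, not a method from the cited works: it assumes a constant predictor `theta`, a ridge-regularized squared loss `(y - theta)^2 + LAM * theta^2`, and a hypothetical distribution map whose mean outcome is `MU - EPS * theta`. All names and constants are illustrative. Repeated retraining is simulated by alternating deployment and empirical risk minimization, and the performative optimum is located by a grid search over a closed-form performative risk that is only available because the distribution map is assumed known here.

```python
import numpy as np

# Hypothetical toy constants: base mean, sensitivity, noise level, regularization.
MU, EPS, SIGMA, LAM = 1.0, 0.5, 0.1, 1.0

def deploy_and_observe(theta, n, rng):
    """Draw outcomes from the toy distribution map D(theta)."""
    return rng.normal(MU - EPS * theta, SIGMA, size=n)

def retrain(y):
    """Minimizer of the empirical risk mean((y - theta)^2) + LAM * theta^2."""
    return float(np.mean(y)) / (1.0 + LAM)

def performative_risk(theta):
    """Closed-form R(theta, D(theta)) for this toy setup."""
    return ((1.0 + EPS) * theta - MU) ** 2 + SIGMA ** 2 + LAM * theta ** 2

# Repeated retraining: deploy, observe the induced data, refit, repeat.
rng = np.random.default_rng(0)
theta = 0.0
trajectory = [theta]
for _ in range(20):
    y = deploy_and_observe(theta, n=50_000, rng=rng)
    theta = retrain(y)
    trajectory.append(theta)
theta_ps = theta  # approximate stable point

# Performative optimum via grid search (possible only because D is known here).
grid = np.linspace(0.0, 1.0, 10_001)
theta_po = float(grid[np.argmin(performative_risk(grid))])

print(f"stable point:  {theta_ps:.3f}, risk {performative_risk(theta_ps):.4f}")
print(f"optimal point: {theta_po:.3f}, risk {performative_risk(theta_po):.4f}")
```

For these constants, each retraining step shrinks the distance to the fixed point by a factor of `EPS / (1 + LAM) = 0.25`, so the iterates settle near `MU / (1 + LAM + EPS) = 0.4`. The grid search finds a different parameter, roughly 0.46, with lower performative risk, in line with the observation that a stable model need not be optimal.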

Outlook

Performative prediction is a relatively recent but rapidly growing area of research, with promising applications across a wide range of domains. For those interested in an entry point into the field, we recommend Hardt & Mendler-Dünner (2023) as a comprehensive overview of key concepts and developments.

Performative prediction has also inspired other theoretical notions, such as performative power, which provides a framework for analyzing the influence digital platforms exert through their algorithms. As machine learning systems increasingly shape the world around them, reasoning about performativity enables a formal study of their impact and the dynamic feedback loops they can create.

References

- Perdomo, J., Zrnic, T., Mendler-Dünner, C., & Hardt, M. (2020). Performative prediction. In International Conference on Machine Learning (pp. 7599-7609). PMLR.

- Hardt, M., & Mendler-Dünner, C. (2023). Performative prediction: Past and future. arXiv preprint arXiv:2310.16608.

- Mendler-Dünner, C., & Zrnic, T. (2024). Introduction to performative prediction [Conference tutorial]. Uncertainty in Artificial Intelligence (UAI) 2024.

- Fischer-Abaigar, U., Kern, C., Barda, N., & Kreuter, F. (2024). Bridging the gap: Towards an expanded toolkit for AI-driven decision-making in the public sector. Government Information Quarterly, 41(4), 101976.