Vision

relAI’s research mission is to train future generations of AI experts in Germany who combine technical brilliance with an awareness of the importance of reliable AI. As a central component of the Munich AI ecosystem, relAI is committed to making artificial intelligence safer, more secure, more responsible, and more protective of individuals’ privacy. It promotes the responsible use of AI in order to realize its vast potential for the benefit of humanity, to advance insight into and debate on machine learning, including its ethical and societal dimensions, and to strengthen “AI made in Germany”.

Research projects are conducted by professors, fellows, visiting scholars, post-docs, PhD candidates and Master’s students in academic departments or research centers. Research dissemination is oriented not only toward the academic world but also toward key innovative industries such as medicine and healthcare, robotics and interacting systems, and algorithmic decision-making.

Research Focus

The scientific program of relAI contributes to the end-to-end development of reliable AI, covering different branches of applied research built on rigorous mathematical and algorithmic foundations. This theoretical grounding of AI applications is a distinguishing feature of relAI: our conception of reliability demands a rigorous formal description of properties as well as provable guarantees, because only such guarantees will create the trust and confidence needed for an unreserved adoption of AI in practice.

Research Areas

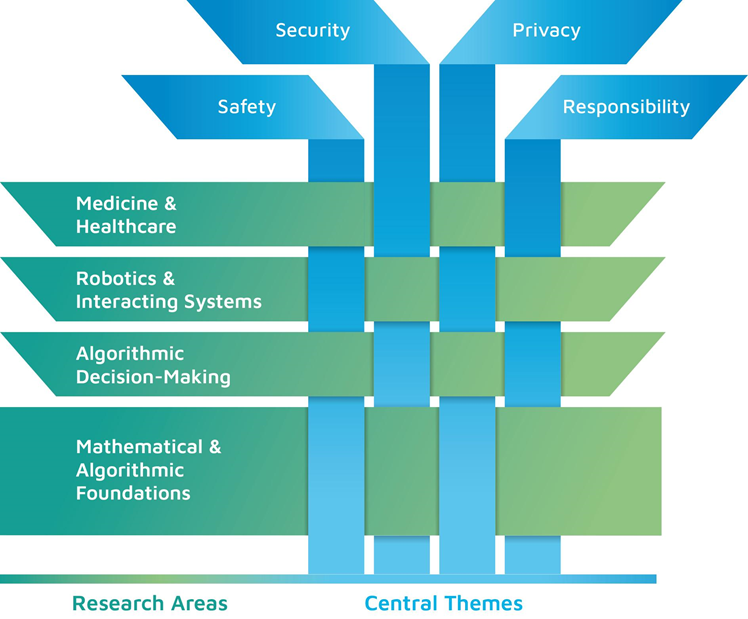

The research program combines the mathematical and algorithmic foundations of reliable AI with domain knowledge in three core application domains (as visualised in the figure above): medicine & healthcare, robotics & interacting systems, and algorithmic decision-making. In these applications, which are of major importance for Germany, reliable AI methods are most urgently needed. The school’s research thus addresses a highly impactful and innovative topic that meets core societal demands in domains of public interest.

Central Themes

Each of the school’s four research areas (green in figure above) covers central themes of reliable AI (blue in figure above):

- Safety, i.e., ensuring that AI systems (e.g., robots) do not cause any harm or danger.

- Security, i.e., making AI systems resilient against threats, external attacks, and information leakage, e.g., preventing adversaries from manipulating decision-making systems.

- Privacy, i.e., ensuring the protection and confidentiality of (individual) data and information, for example in medical AI systems that incorporate sensitive patient data.

- Responsibility, i.e., developing AI systems that take societal norms, ethical principles, and the needs of people into consideration, for example by making decisions understandable and protecting individuals against discrimination.

By combining foundational AI research with core applications, we achieve strong interdisciplinarity within relAI.