Distribution-free Uncertainty Quantification

Uncertainty quantification in machine learning (ML) and artificial intelligence (AI) lies at the heart of trustworthy and reliable decision-making in real-world applications. While ML models are celebrated for their remarkable predictive capabilities, they often operate in high-stakes environments, where incorrect or overconfident predictions can have serious consequences. Prime examples of such high-stakes applications include autonomous systems, medical diagnosis, and financial forecasting. In such applications, understanding what a model does not know is just as crucial as understanding what it predicts. However, achieving reliable uncertainty quantification is a challenging task, and it has attracted considerable interest in recent years (Hüllermeier & Waegeman, 2021).

In this blog post, we will revisit one particular family of uncertainty quantification methods: conformal prediction. What makes conformal prediction remarkable is its ability to provide prediction intervals (or sets) that contain the unseen outcome with high probability, all while relying on minimal assumptions. Rooted in statistical theory, conformal prediction rests on rigorous theoretical foundations while adapting seamlessly to practical settings.

What is conformal prediction?

The key idea behind conformal prediction (Vovk et al., 2005) is to construct prediction sets that are guaranteed to include the true outcome with high probability, such as 90% or 95%. Conformal prediction procedures stand out because they don’t rely on strong assumptions about the data-generating process or the underlying model. Instead, conformal prediction provides a flexible framework that wraps around any predictive model, from a simple linear regression model to a complex neural network.

Suppose we are predicting the type of medical condition a patient might have based on their features, such as age, weight, and various test results. Instead of providing a single prediction, such as "Diabetes", conformal prediction produces a set of plausible outcomes: for instance, "{Diabetes, Hypertension, Heart Disease}" (indicating uncertainty) or just "{Diabetes}" (indicating high confidence).

Prediction sets produced by conformal procedures come with marginal [1] validity guarantees at a user-defined confidence level. Specifically, the only assumption underlying conformal prediction is that the training data and the test point are exchangeable, i.e., their joint distribution does not change when their order is permuted. Exchangeability is a weaker assumption than i.i.d. (every i.i.d. sample is exchangeable, but not vice versa) and can often be justified in practical scenarios.

[1] A note of caution: the coverage property is referred to as marginal because the probability is taken over the randomness in both the training data and the test point. It does not guarantee coverage conditional on a particular test input.
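Formally, let $(X_1, Y_1), \dots, (X_n, Y_n)$ denote the training data, $(X_{n+1}, Y_{n+1})$ the test point, $C(X_{n+1})$ the prediction set, and $\alpha$ a user-chosen miscoverage level (e.g., $\alpha = 0.1$ for 90% confidence). The notation is ours, chosen for illustration. Exchangeability and the resulting marginal guarantee can then be written as:

```latex
% Exchangeability: the joint law of Z_i = (X_i, Y_i) is unchanged by any permutation \pi of the n+1 indices
(Z_1, \dots, Z_{n+1}) \overset{d}{=} (Z_{\pi(1)}, \dots, Z_{\pi(n+1)}) \quad \text{for every permutation } \pi

% Marginal coverage: probability taken jointly over the training data and the test point
\mathbb{P}\big( Y_{n+1} \in C(X_{n+1}) \big) \ge 1 - \alpha
```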

A simple classification task

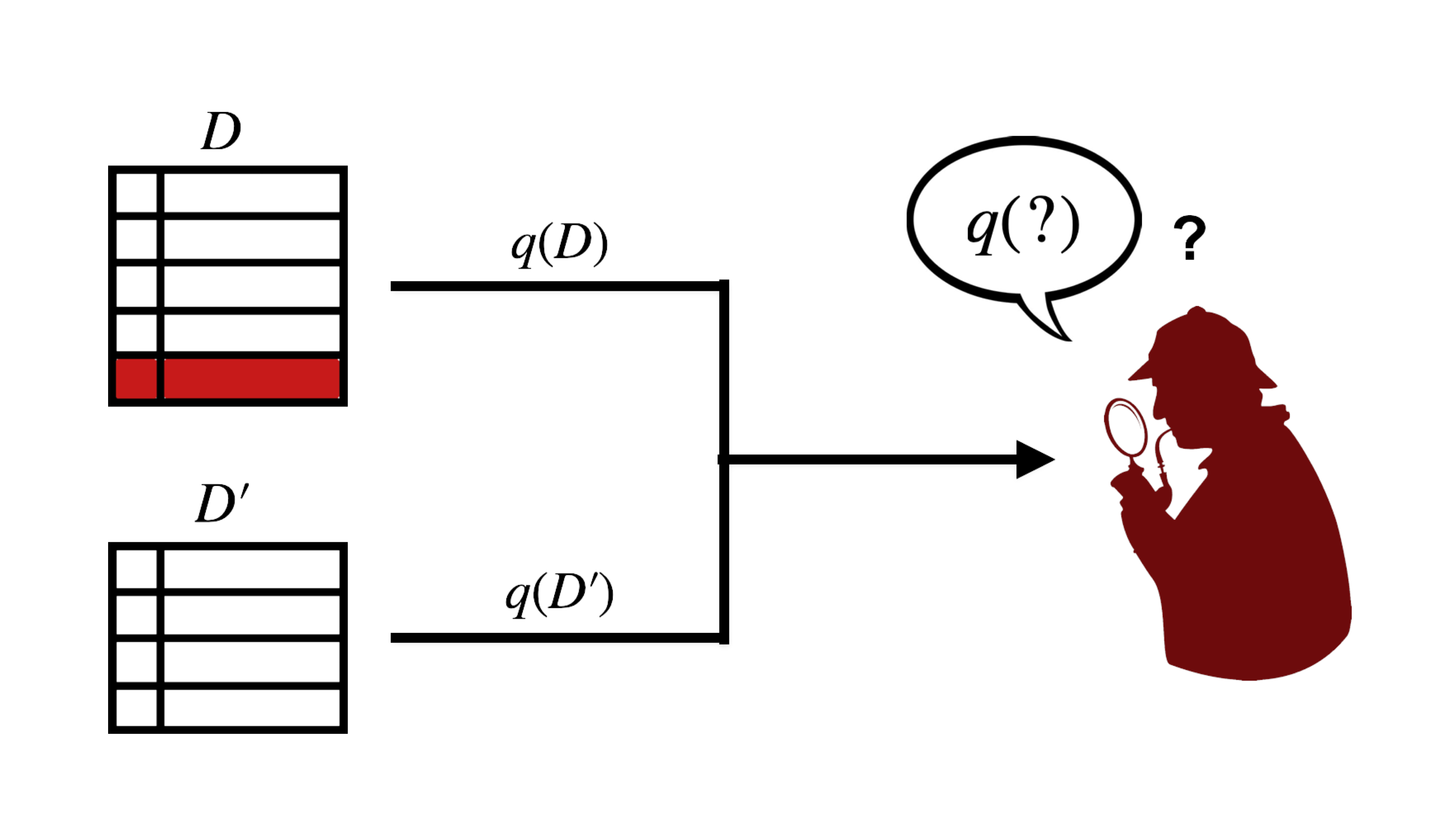

To illustrate how conformal prediction works in its essence, let's consider the example illustrated in the figure above.

The task is to classify a new data point (gray) into one of three possible classes (red, yellow, blue). Recall that, in a standard classification setting, a model typically produces a single point prediction. For example, such a model might decide that the gray point belongs to the yellow class.

Conformal prediction can be applied as follows: first, we hypothesize a label for the unseen outcome, i.e., in our example we pick $y \in \mathcal{Y} = \{\text{red}, \text{yellow}, \text{blue}\}$. In other words, we assume, for example, that the true, unseen class for the gray point is yellow. Based on this hypothesis, we augment the training data $(X_1, Y_1), \dots, (X_n, Y_n)$ by adding the test feature $X_{n+1}$ along with the hypothesized label $y$. Using the augmented dataset, we then train a (probabilistic) model of our choice.

Given the trained model, we compute so-called non-conformity scores for both the training data $(X_i, Y_i)$, $i = 1, \dots, n$, and the test point $(X_{n+1}, y)$, where $y$ is the hypothesized label for the gray data point. A common and intuitive way to define non-conformity scores in classification tasks is to use $s(x, y) = 1 - \hat{p}(y \mid x)$, where $\hat{p}(y \mid x)$ is the predicted conditional probability of $y$ given $x$, provided by the probabilistic classifier. This score captures how "unlikely" the model considers a particular label $y$ for a given input $x$. For the training data, we calculate scores $S_i = 1 - \hat{p}(Y_i \mid X_i)$, where $\hat{p}(Y_i \mid X_i)$ is the predicted probability for the observed class $Y_i$. Similarly, for the test point $(X_{n+1}, y)$ the score is $S_{n+1}^{y} = 1 - \hat{p}(y \mid X_{n+1})$.
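As a minimal sketch, the score $1 - \hat{p}(y \mid x)$ can be computed directly from a classifier's predicted class probabilities. We assume classes are encoded as integer indices (e.g., red = 0, yellow = 1, blue = 2), and the function names are hypothetical, not part of any library.

```python
from typing import Sequence


def nonconformity_score(prob_of_label: float) -> float:
    """Score s(x, y) = 1 - p_hat(y | x): large when the model finds label y unlikely."""
    return 1.0 - prob_of_label


def training_scores(probs: Sequence[Sequence[float]], labels: Sequence[int]) -> list[float]:
    """S_i = 1 - p_hat(Y_i | X_i) for every training point.

    probs[i][k] is the predicted probability of class k for input X_i (hypothetical layout);
    labels[i] is the observed class index Y_i.
    """
    return [nonconformity_score(p[y]) for p, y in zip(probs, labels)]
```

For example, `training_scores([[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]], [0, 1])` yields scores of about 0.3 and 0.7: the second point's observed class received a low probability, so it is less conforming.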

To determine whether the hypothesized label $y$ should be included in the prediction set for the gray data point, we compare the test score $S_{n+1}^{y}$ to a threshold determined from the non-conformity scores of the training data. This threshold is chosen such that the marginal validity guarantee holds at the desired confidence level $1 - \alpha$. For instance, if we aim for 90% confidence ($\alpha = 0.1$), the threshold corresponds roughly to the 90th percentile of the training data's non-conformity scores. More precisely, it is the $\lceil (1 - \alpha)(n + 1) \rceil$-th smallest of the $n$ training scores (or $+\infty$ if this rank exceeds $n$), a small finite-sample correction that makes the guarantee valid for any sample size. If the score $S_{n+1}^{y}$ is less than or equal to this threshold, we include $y$ in the prediction set. Otherwise, $y$ is excluded, as it is deemed too "non-conforming" to be a plausible label for the gray data point.
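The thresholding step can be sketched as follows, assuming the training scores have already been computed. Instead of a plain 90th percentile, the sketch uses the finite-sample rank $\lceil (1-\alpha)(n+1) \rceil$; with $\alpha = 0.1$ this corresponds to 90% confidence. The function names are hypothetical.

```python
import math
from typing import Sequence


def conformal_threshold(train_scores: Sequence[float], alpha: float) -> float:
    """Return the ceil((1 - alpha) * (n + 1))-th smallest training score.

    If that rank exceeds n (too few points for the requested level),
    the threshold is +inf, so every candidate label is kept.
    """
    n = len(train_scores)
    k = math.ceil((1 - alpha) * (n + 1))
    if k > n:
        return math.inf
    return sorted(train_scores)[k - 1]  # k-th smallest (1-based rank)


def include_label(test_score: float, train_scores: Sequence[float], alpha: float) -> bool:
    """Keep the hypothesized label y iff S_{n+1}^y <= threshold."""
    return test_score <= conformal_threshold(train_scores, alpha)
```

With 19 training scores and $\alpha = 0.1$, the rank is $\lceil 0.9 \cdot 20 \rceil = 18$, i.e., the second-largest score; with only 5 scores the rank would be 6, so the threshold becomes infinite and nothing is excluded.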

By iterating through all possible labels $y$ in the label space $\mathcal{Y}$, we construct the final prediction set. In classification problems, this process is straightforward due to the finite number of candidate labels. In regression problems, the label space is continuous and cannot be enumerated, but efficient algorithms exist for certain models (for example, ridge regression) to construct prediction sets.
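Putting the steps together, a minimal sketch of full conformal classification on 2-D features might look as follows. As an assumption for illustration, the "probabilistic model" is a toy nearest-centroid classifier that turns negative squared distances to the class means into probabilities via a softmax; any probabilistic classifier could be swapped in. Because the model is refit for every hypothesized label, the training scores change with $y$ as well. All names here are hypothetical.

```python
import math
from typing import Sequence

Point = tuple[float, float]


def fit_centroids(xs: Sequence[Point], ys: Sequence[int], n_classes: int) -> list[Point]:
    """Toy 'training' step: the mean feature vector of each class."""
    sums = [[0.0, 0.0] for _ in range(n_classes)]
    counts = [0] * n_classes
    for (a, b), y in zip(xs, ys):
        sums[y][0] += a
        sums[y][1] += b
        counts[y] += 1
    if 0 in counts:
        raise ValueError("every class needs at least one training point")
    return [(s[0] / c, s[1] / c) for s, c in zip(sums, counts)]


def predict_proba(centroids: Sequence[Point], x: Point) -> list[float]:
    """Softmax over negative squared distances to the class centroids."""
    logits = [-((x[0] - cx) ** 2 + (x[1] - cy) ** 2) for cx, cy in centroids]
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def full_conformal_set(
    xs: Sequence[Point],
    ys: Sequence[int],
    x_test: Point,
    n_classes: int,
    alpha: float = 0.1,
) -> list[int]:
    """Full (transductive) conformal prediction set for x_test."""
    n = len(xs)
    k = math.ceil((1 - alpha) * (n + 1))  # rank of the threshold among the n training scores
    prediction_set = []
    for y in range(n_classes):  # hypothesize each candidate label in turn
        aug_x = list(xs) + [x_test]
        aug_y = list(ys) + [y]
        centroids = fit_centroids(aug_x, aug_y, n_classes)  # retrain on the augmented data
        # Non-conformity score 1 - p_hat(label | x) for all n + 1 points.
        scores = [1.0 - predict_proba(centroids, xi)[yi] for xi, yi in zip(aug_x, aug_y)]
        train_scores, test_score = scores[:-1], scores[-1]
        threshold = math.inf if k > n else sorted(train_scores)[k - 1]
        if test_score <= threshold:
            prediction_set.append(y)
    return prediction_set
```

Refitting once per candidate label is what makes full conformal prediction expensive: the cost is the number of labels times the cost of training.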

Returning to our example, the prediction set for the gray point is $\{\text{yellow}, \text{blue}\}$. This prediction set indicates the model's uncertainty: both yellow and blue classes were sufficiently conforming to the training data to remain in the prediction set, reflecting that the gray point could plausibly belong to either class.

Discussion

The only assumption required for marginal coverage is that the training data and the test point are exchangeable. Marginal coverage refers to the property that the prediction sets contain the true outcome with at least the specified probability, on average over the randomness of both the training set and the test point. This guarantee holds irrespective of the choice of model or the underlying data-generating process.

Another critical point to consider is that, although marginal coverage is guaranteed, a poorly performing model or an inappropriate choice of non-conformity score can result in uninformative prediction sets. Specifically, the sets may become excessively large, offering little practical value. To evaluate prediction sets effectively, one typically considers two metrics: coverage, i.e., whether the sets contain the true outcome with the desired probability, and efficiency, which reflects how small the sets (or how narrow the intervals) are while still maintaining the required coverage.
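For classification, both metrics can be estimated on held-out test data: coverage as the fraction of points whose true label lies in its prediction set, and efficiency as the average set size (for regression intervals, the average width plays this role). A minimal sketch with hypothetical function names:

```python
from typing import Sequence


def empirical_coverage(pred_sets: Sequence[set[int]], labels: Sequence[int]) -> float:
    """Fraction of test points whose true label is contained in its prediction set."""
    hits = sum(1 for s, y in zip(pred_sets, labels) if y in s)
    return hits / len(labels)


def average_set_size(pred_sets: Sequence[set[int]]) -> float:
    """Mean number of labels per set; smaller means more efficient, more informative sets."""
    return sum(len(s) for s in pred_sets) / len(pred_sets)
```

For instance, `empirical_coverage([{0}, {1, 2}, {0, 1, 2}, {2}], [0, 0, 1, 2])` returns `0.75`, and `average_set_size` on the same sets returns `1.75`. Note that always predicting the full label set trivially achieves 100% coverage, which is why coverage is only meaningful together with set size.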

The procedure described above is commonly known as full (or transductive) conformal prediction, which can be computationally demanding because the model must be retrained for each hypothesized outcome. A more computationally efficient alternative is split (or inductive) conformal prediction (Papadopoulos et al., 2002). This approach avoids retraining the model multiple times by splitting the data into a proper training set, on which the model is fit once, and a separate calibration set, on which the non-conformity scores that determine the threshold are computed. The price is that fewer data points are available for training the model. Further details can be found in the additional resources below.
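A minimal sketch of split conformal classification, assuming `predict_proba` is a callable (a hypothetical interface) that returns class probabilities from a model already fit once on the proper training split. The calibration scores use the same score $1 - \hat{p}(y \mid x)$ and the same $\lceil (1-\alpha)(n+1) \rceil$ rank rule, now with $n$ the number of calibration points; no refitting per candidate label is needed.

```python
import math
from typing import Callable, Sequence

# Hypothetical interface: maps a feature vector to a list of class probabilities.
ProbaFn = Callable[[Sequence[float]], Sequence[float]]


def calibrate(predict_proba: ProbaFn, cal_x, cal_y, alpha: float) -> float:
    """Threshold from held-out calibration scores S_i = 1 - p_hat(Y_i | X_i)."""
    scores = sorted(1.0 - predict_proba(x)[y] for x, y in zip(cal_x, cal_y))
    n = len(scores)
    k = math.ceil((1 - alpha) * (n + 1))
    return math.inf if k > n else scores[k - 1]


def split_conformal_set(predict_proba: ProbaFn, x: Sequence[float], threshold: float) -> list[int]:
    """All labels whose score 1 - p_hat(y | x) does not exceed the calibrated threshold."""
    probs = predict_proba(x)
    return [y for y, p in enumerate(probs) if 1.0 - p <= threshold]
```

Because the threshold depends only on the calibration scores, it is computed once and then reused for every test point.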

A non-exhaustive list of resources

Finally, we provide a selection of resources that, while not exhaustive, may serve as a helpful starting point for readers interested in exploring conformal prediction and related topics.

- Awesome-conformal-prediction: A professionally curated list of awesome Conformal Prediction videos, tutorials, books, papers, PhD and MSc theses, articles and open-source libraries (all resources listed here, and many more, can be found there).
- Algorithmic learning in a random world: A foundational book by Vladimir Vovk, Alex Gammerman, and Glenn Shafer, the pioneers of conformal prediction, offering a comprehensive introduction to its theory and principles.
- A Gentle Introduction to Conformal Prediction: A gentle introduction to conformal prediction and distribution-free uncertainty quantification, with clear and accessible explanations for a broad audience.
- Theoretical Foundations of Conformal Prediction: A recent (and still evolving) book on the theoretical foundations of conformal prediction.

Hüllermeier, Eyke, and Willem Waegeman. "Aleatoric and epistemic uncertainty in machine learning: An introduction to concepts and methods." Machine Learning 110.3 (2021): 457-506.

Papadopoulos, Harris, et al. "Inductive confidence machines for regression." Machine Learning: ECML 2002, 13th European Conference on Machine Learning, Helsinki, Finland, August 19–23, 2002, Proceedings. Springer Berlin Heidelberg, 2002.

Vovk, Vladimir, Alexander Gammerman, and Glenn Shafer. Algorithmic learning in a random world. Vol. 29. New York: Springer, 2005.